I have learned so much this semester that it’s hard to know where to begin. But I guess I need to begin with metadata and taxonomy. Early in the semester I posted that “I read some articles this week that made me realize that much of what added value librarians provide to collections is in the form of metadata. I guess I always thought of librarians mainly as reference librarians or subject specialists, not as experts in classifying and indexing information.” But now I know (a little) about the value of good metadata and taxonomies, and I’ve learned about metadata standards such as Dublin Core, which help to standardize metadata usage across the web. I’ve learned about the semantic web, and the idea of linking data and building ontologies that describe the relations between concepts. I’ve learned about the contrasting benefits of controlled vocabularies versus “folksonomy” (i.e., tagging). And I’ve learned a little about harvesting metadata, using PKPHarvester to harvest metadata from several databases and data providers.

I’ve also learned more about the Open Access movement and open access initiatives, and about the issues involved in “freeing” information from behind paywalls. The main obstacle is that knowledge (and its associated data) is a currency that has value, and that making it freely available will necessitate basic structural changes in academia and in academic publishing.

Those structural changes include major changes in the role of the library and librarians in the production and preservation of knowledge. These changes present significant challenges to libraries in managing, curating, and preserving digital materials and data. Librarians are increasingly expected to have the technical skills to design and select Content Management Systems for their libraries; to design, create, and maintain digital collections and digital repositories; and to train other librarians to do the same, often with limited technical staff and limited budgets. Open Source software is a boon to the small library, non-profit, or museum that needs this kind of functionality; but again, that requires technical knowledge and skill on the part of the librarian to install, configure, and maintain operating systems and small in-house servers.

In order to gain those technical skills, I learned how to create several virtual machines with Linux stacks of various sorts, and to install and configure four different content management/digital repository software systems (Drupal, DSpace, EPrints, and Omeka). I created a sample digital collection in each one, and used the experience to compare each system’s strengths and weaknesses, and then to decide which sorts of digital collections (and environments) each system is best suited for. I then chose which system to use to host my digital collection, set up the system, entered the records and created the metadata, and then wrote a paper on the process, which will contribute toward my digital portfolio. In my case, I decided upon Drupal as the system I want to use for my digital collection. Drupal has a steep learning curve, and I learned a lot about it in a short time through the process of designing my collection, downloading and installing extra modules to provide the functions I needed, and troubleshooting the installation. I’m proud of how well my prototype digital collection works, but I already have plans to keep improving it, to extend its functions, and to redesign certain features. I’m turning into a Drupal geek already.

Librarians are also expected to conduct outreach to their various communities, in order to make the services of the library more accessible and useful. This seems to be especially needed for the humanities scholar community. We read many articles about the obstacles that keep humanities scholars from embracing digital initiatives, and from using digital resources (and those articles confirmed my own observations). We learned about how humanities scholarship, data, and workflows are vastly different from those of the scientific community; identified some of the obstacles that prevent humanities scholars from using (or producing) digital resources, including digital repositories; and read about several digital humanities initiatives, both in the U.S. and in Europe.

I think one of the most enjoyable aspects of the course for me was just the chance to see so many different examples of digital collections; to interact with my fellow students over their collections and interests; and to explore what is already being done, and what is possible and useful. A find that was very helpful to me was the UK Reading Experience Database (RED). This database contains many of the same sorts of data that I wanted to collect in my own digital collection, so it gave me some assurance that I was on the right track with my ideas.

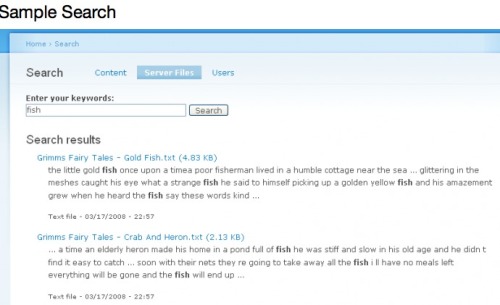

Finally, I have included a slideshow of some screenshots from my project.